Hi Pythonistas!

Today I'm leveling up my AI math toolkit with Softmax and a powerful trick called Temperature Scaling.

These two are everywhere in machine learning, from choosing 'dog vs cat' to making chatbots less overconfident.

Let’s see how they work and why you’d want to use them!

Can a Computer Turn Scores Into Chances?

Ever wonder how a model decides which answer to pick and how confident it should be?

Suppose your model outputs these scores for an image:

Cat: 2.0

Dog: 1.0

Penguin: 0.1

Softmax steps in and flips these numbers into proper probabilities, so it's easy to say "the model is 66% sure it's a cat."

But what if the model's way too confident or not confident enough? Here comes temperature scaling!

Step-by-Step: Softmax for Humans

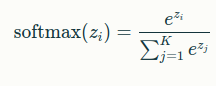

Step 1: The Math

The softmax formula:

$$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

Here:

- $z_i$ is the input score (logit) for class $i$.
- $K$ is the total number of classes.
- $e^{z_i}$ means we raise the constant $e$ (about 2.718) to the power of $z_i$.
- The denominator sums these exponentials over every class to normalize the values.

Take each score and raise e (≈2.718) to the power of that score.

Add all those up for every class.

Divide each "e-to-the-score" by the total, making probabilities that sum to 1.

Step 2: Try It in Python!

    import numpy as np

    def softmax(logits):
        # Exponentiate each score, then normalize so the results sum to 1
        exp_scores = np.exp(logits)
        return exp_scores / np.sum(exp_scores)

    logits = np.array([2.0, 1.0, 0.1])
    probs = softmax(logits)
    print("Softmax probabilities:", probs)

Output

    Softmax probabilities: [0.65900114 0.24243297 0.09856589]

Now you’ve got real, understandable chances!
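One thing worth knowing about the simple softmax above: `np.exp` overflows when the scores get very large. A common workaround is to subtract the largest score before exponentiating, which doesn't change the result because the shared factor cancels out in the division. Here's a minimal sketch of that idea (the name `stable_softmax` and the "big" test scores are just my own picks for illustration):

```python
import numpy as np

def stable_softmax(logits):
    # Convert to a float array so plain Python lists work too
    logits = np.asarray(logits, dtype=float)
    # Subtracting the max leaves the result mathematically unchanged,
    # because the common factor e^(-max) cancels in the ratio
    shifted = logits - np.max(logits)
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores)

small = np.array([2.0, 1.0, 0.1])
big = np.array([1000.0, 1001.0, 1002.0])  # np.exp(1000.0) alone would overflow

small_probs = stable_softmax(small)
big_probs = stable_softmax(big)

print("Small scores:", small_probs)  # same probabilities as the plain softmax
print("Big scores:  ", big_probs)    # finite values that still sum to 1
```

For everyday scores like the cat/dog/penguin example, both versions give the same probabilities; the shifted version just keeps working when the scores are huge.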

Step 3: What is Temperature Scaling?

Sometimes, your model is way too confident (or not confident enough). Temperature scaling lets you dial in "how sure" your AI sounds, like a thermostat for probabilities!

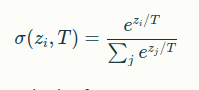

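The new formula divides every score by a temperature T before applying softmax:

$$\mathrm{softmax}(z_i; T) = \frac{e^{z_i / T}}{\sum_{j=1}^{K} e^{z_j / T}}$$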

When T=1: it’s plain old softmax.

T>1: Softens the probabilities, making the model less confident!

T<1: Sharpens the distribution, making one answer nearly certain.

Try it in Python:

    def softmax_with_temp(logits, T=1.0):
        # Divide the scores by T before exponentiating; T=1.0 is plain softmax
        exp_scores = np.exp(logits / T)
        return exp_scores / np.sum(exp_scores)

    print("T=1.0:", softmax_with_temp(logits, T=1.0))
    print("T=2.0 (softer):", softmax_with_temp(logits, T=2.0))
    print("T=0.5 (sharper):", softmax_with_temp(logits, T=0.5))

Output

    T=1.0: [0.65900114 0.24243297 0.09856589]
    T=2.0 (softer): [0.50168776 0.30428901 0.19402324]
    T=0.5 (sharper): [0.86377712 0.11689952 0.01932336]

You can see how changing T changes the confidence levels!
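To get a feel for the two extremes, here's a small sketch that sweeps T over a wider range (the specific T values are arbitrary choices of mine). It suggests two things: the winning class never changes, only how confident the model sounds, and a very large T pushes everything toward an even split.

```python
import numpy as np

def softmax_with_temp(logits, T=1.0):
    # Same function as above, repeated so this snippet runs on its own
    exp_scores = np.exp(logits / T)
    return exp_scores / np.sum(exp_scores)

logits = np.array([2.0, 1.0, 0.1])

results = []
for T in [0.1, 0.5, 1.0, 2.0, 10.0, 100.0]:
    probs = softmax_with_temp(logits, T)
    top_class = int(np.argmax(probs))   # index of the most likely class
    top_prob = float(probs.max())       # how confident the model sounds
    results.append((T, top_class, top_prob))
    print(f"T={T:>5}: top class = {top_class}, top probability = {top_prob:.3f}")
```

With three classes, the top probability drifts toward 1/3 as T grows and toward 1 as T shrinks, while class 0 (Cat) stays on top the whole time.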

Practical Applications

- AI Classification: Softmax is the final step in image/text classifiers, so your AI can tell you how likely each answer is!

- Calibrating Confidence: Use temperature scaling (with T usually tuned on held-out validation data) to make models less over- (or under-) confident, making them safer for real-world use in medicine, self-driving, or banking.

- Knowledge Distillation: Teach smaller AIs using “soft” probabilities (high T) for better student learning.

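- Model Security: Training with a higher T (as in defensive distillation) has been proposed as a way to make models more robust against adversarial inputs, although stronger attacks have since been shown to get around it.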
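To make the calibration idea from the list above a bit more concrete, here's a rough sketch of how a temperature could be picked. Everything in it is an assumption for illustration: the data is made-up toy data (an "overconfident model" whose scores are 3x too large), the function names are my own, and a simple grid search stands in for whatever optimizer a real project would use. The idea is to try several values of T and keep the one with the lowest negative log-likelihood (NLL) on held-out labels.

```python
import numpy as np

def log_softmax_rows(logits, T=1.0):
    """Row-wise log-softmax of logits / T, computed in a numerically stable way."""
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def nll(logits, labels, T):
    """Average negative log-likelihood of the true labels at temperature T."""
    log_probs = log_softmax_rows(logits, T)
    return -np.mean(log_probs[np.arange(len(labels)), labels])

# Toy data (made up): labels are drawn from softmax(true_logits), but the
# "model" reports logits that are 3x too large, so it is overconfident.
rng = np.random.default_rng(0)
true_logits = rng.normal(size=(5000, 3))
true_probs = np.exp(log_softmax_rows(true_logits))
labels = np.array([rng.choice(3, p=p) for p in true_probs])
model_logits = 3.0 * true_logits

# Grid search: keep the temperature with the lowest NLL on this data
candidate_Ts = np.linspace(0.5, 5.0, 46)
losses = [nll(model_logits, labels, T) for T in candidate_Ts]
best_T = candidate_Ts[int(np.argmin(losses))]

print(f"NLL at T=1.0: {nll(model_logits, labels, 1.0):.4f}")
print(f"Best T: {best_T:.1f}, NLL: {min(losses):.4f}")
```

Because the toy scores were inflated by a factor of 3, the search should land somewhere near T = 3, which "cools down" the overconfident model. Note that dividing by T never changes which class wins, so accuracy stays the same and only the confidence changes.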

What I Learned

- Softmax turns raw model numbers into real, human-understandable probabilities.

- Temperature scaling lets you control the "certainty" dial so your AI sounds smart, not arrogant.

- This combo is everywhere in deep learning, from simple classifiers to robust, trustworthy systems!

What’s Next

Derivatives and gradient descent