Hi Pythonistas!

Today, let's talk about Logistic Regression, one of the most fundamental and widely used algorithms in machine learning, especially for classification tasks.

What Is Logistic Regression?

Logistic regression is a supervised learning algorithm used for classification problems. Instead of predicting a class label directly, it predicts the probability that an input belongs to a specific class. Most commonly, it's used for binary classification: deciding between two categories, such as Yes/No, Spam/Not Spam, or Positive/Negative.

How Does It Work?

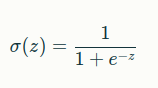

The model estimates the relationship between the input features and the probability that the output belongs to a particular class. It uses the sigmoid function (also called the logistic function) to map any real-valued number to a value between 0 and 1, which can be interpreted as a probability.

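The sigmoid function looks like this:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

where $z = w_1 x_1 + w_2 x_2 + \dots + w_n x_n + b$ is the weighted sum of the input features $x_i$ (with weights $w_i$) plus a bias $b$.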
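Here is a minimal Python sketch of the formula that uses only the standard library. The name `sigmoid` is my own choice for illustration. The two branches are one common way to keep `math.exp` from overflowing when the input is a large negative number.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number z into the range 0 to 1."""
    # For z >= 0, exp(-z) is at most 1, so it cannot overflow.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For z < 0, use the algebraically equivalent form e^z / (1 + e^z).
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


for z in (-5, 0, 5):
    print(z, round(sigmoid(z), 4))
# -5 0.0067
# 0 0.5
# 5 0.9933
```

Large negative scores map close to 0, a score of 0 maps to exactly 0.5, and large positive scores map close to 1.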

Key Points:

- The output of logistic regression always lies between 0 and 1, so it can be read directly as a probability.

- By choosing a threshold (commonly 0.5), we turn the probability into a class label: if the probability is greater than the threshold, predict Class 1; otherwise, predict Class 0.

- It finds the best weights for your input features by maximizing the likelihood of the observed data (equivalently, minimizing the cross-entropy loss), typically with an iterative optimization method such as gradient descent.
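Here is the likelihood being maximized, written out. Assume $m$ training examples. Example $j$ has a true label $y_j$ (0 or 1) and a predicted probability $p_j = \sigma(z_j)$, where $\sigma$ is the sigmoid and $z_j$ is that example's weighted sum plus bias. Maximizing the log-likelihood $\ell$ is the same as minimizing its negative average $J$, which is the cross-entropy loss:

$$
\ell(\mathbf{w}, b) = \sum_{j=1}^{m} \Big[\, y_j \log p_j + (1 - y_j) \log(1 - p_j) \,\Big]
$$

$$
J(\mathbf{w}, b) = -\frac{1}{m}\,\ell(\mathbf{w}, b)
$$

For each example only one of the two log terms is active. When $y_j = 1$ the objective rewards a high $p_j$, and when $y_j = 0$ it rewards a low $p_j$.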

How Logistic Regression Works Internally

Step 1: Collect Information (Input Features)

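The model starts with details about the thing you want to classify, such as its size, color, or shape. These details are called features, and the model works with them as numbers (a category like color is first encoded numerically).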

Step 2: Combine Details into a Score

The model multiplies each feature by a weight, a number that reflects how important the model thinks that feature is.

It then adds up these products, plus an extra number called the bias. The bias lets the model shift the score up or down regardless of the feature values. This combined total is the model's overall score, which tells how strong the evidence is for one class or the other: positive scores favor class 1 and negative scores favor class 0.
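A minimal plain-Python sketch of Step 2 follows. The function `weighted_score` and the feature values, weights and bias are hypothetical. They are chosen only to show the arithmetic z = w1*x1 + w2*x2 + b.

```python
from typing import Sequence


def weighted_score(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Return z = w1*x1 + w2*x2 + ... + wn*xn + b."""
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per feature")
    # Multiply each feature by its weight, add the products, then add the bias.
    return sum(w * x for w, x in zip(weights, features)) + bias


# Hypothetical example: two numeric features with made-up weights and bias.
features = [2.0, 3.0]
weights = [0.5, -1.0]
bias = 0.25
print(weighted_score(features, weights, bias))  # 0.5*2.0 + (-1.0)*3.0 + 0.25 = -1.75
```

A negative score like -1.75 leans toward class 0 before the sigmoid is even applied.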

Step 3: Turn Score into a Confidence Number

The model uses a special curve called the sigmoid function to change this score into a number between 0 and 1. This number represents the confidence or probability that the input is in the positive class.

Step 4: Make a Decision

If this probability is above a threshold (usually 0.5), the model predicts class 1 ("Yes"). Otherwise, it predicts class 0 ("No").
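The sketch below shows Step 4 as code, under the assumption of a 0.5 threshold and a numerically stable sigmoid. The names `sigmoid` and `predict_label` are illustrative and not taken from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


def predict_label(z: float, threshold: float = 0.5) -> int:
    """Return 1 ("Yes") if sigmoid(z) is above the threshold, else 0 ("No")."""
    probability = sigmoid(z)
    return 1 if probability > threshold else 0


# Hypothetical scores coming out of Step 2
for score in (-1.75, 0.0, 2.0):
    print(score, round(sigmoid(score), 3), predict_label(score))
# predicted labels: 0, 0, 1
```

The comparison is strict (`>`). A score of exactly 0 therefore gives a probability of exactly 0.5 and is labeled 0. Lowering `threshold` makes the model say "Yes" more often.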

Step 5: Check How Right or Wrong the Model Is

The model compares its predictions with the true answers and calculates a number called the loss, which measures how wrong it is. For logistic regression, this is usually the cross-entropy (log) loss.
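Here is a minimal sketch of the average binary cross-entropy over a batch of predictions. The function name and the small `eps` clip are my own assumptions for illustration. The clip stops `math.log` from ever receiving 0.

```python
import math
from typing import Sequence


def binary_cross_entropy(y_true: Sequence[int], y_prob: Sequence[float], eps: float = 1e-12) -> float:
    """Average cross-entropy (log) loss for 0/1 labels and predicted probabilities."""
    if not y_true or len(y_true) != len(y_prob):
        raise ValueError("need at least one label and one probability per label")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip p away from exactly 0 and 1 so math.log never gets 0.
        p = min(max(p, eps), 1.0 - eps)
        # Only one term is non-zero: -log(p) when y == 1, -log(1 - p) when y == 0.
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0, 1]
print(binary_cross_entropy(labels, [0.9, 0.1, 0.8]))  # small: confident and right
print(binary_cross_entropy(labels, [0.1, 0.9, 0.2]))  # large: confident and wrong
```

The loss grows sharply when the model is confident and wrong, which pushes training hardest on those mistakes.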

Step 6: Learn and Improve

The model adjusts each weight and the bias in the direction that reduces the loss, typically using an optimization method such as gradient descent. It repeats Steps 2 to 5 and this update many times (often thousands of iterations) until the loss stops improving.
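The training loop below puts Steps 2 through 6 together. It is a minimal from-scratch sketch using batch gradient descent, which is one common way to do this step (libraries often use other optimizers). It relies on a property of cross-entropy loss. The gradient with respect to each weight is the average of (p - y) times that feature, and the gradient with respect to the bias is the average of (p - y). The dataset, learning rate and epoch count are hypothetical values picked for illustration.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


def predict_proba(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Steps 2 and 3: weighted sum plus bias, then sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train_logistic_regression(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    learning_rate: float = 0.1,
    epochs: int = 1000,
) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on the average cross-entropy loss."""
    m, n_features = len(X), len(X[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(X, y):
            # For cross-entropy loss, d(loss)/dz simplifies to (p - y).
            error = predict_proba(features, weights, bias) - label
            for k, x in enumerate(features):
                grad_w[k] += error * x
            grad_b += error
        # Step 6: move each parameter against its average gradient.
        weights = [w - learning_rate * g / m for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / m
    return weights, bias


# Hypothetical toy data: one numeric feature, label 1 when the feature is positive.
X = [[-2.0], [-1.5], [-1.0], [-0.5], [0.5], [1.0], [1.5], [2.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic_regression(X, y)
for features, label in zip(X, y):
    print(features, label, round(predict_proba(features, weights, bias), 3))
```

This toy data is symmetric around 0, so the learned bias stays close to 0. On real data, the bias shifts the decision boundary away from the origin.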

Types of Logistic Regression

- Binary Logistic Regression: Two classes (e.g., Spam or Not Spam)

- Multinomial Logistic Regression: More than two classes, without order (e.g., Cat, Dog, Sheep)

- Ordinal Logistic Regression: More than two classes with an order (e.g., Low, Medium, High)
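In the multinomial case, the sigmoid is typically replaced by the softmax function. Softmax turns one score per class into probabilities that sum to 1. Below is a minimal sketch. The scores for Cat, Dog and Sheep are made up for illustration. In a real model, each class's score would come from its own weights and bias.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert one raw score per class into probabilities that sum to 1."""
    # Subtracting the largest score leaves the result unchanged but keeps
    # math.exp from overflowing when scores are large.
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three unordered classes
classes = ["Cat", "Dog", "Sheep"]
probs = softmax([2.0, 1.0, 0.1])
for name, p in zip(classes, probs):
    print(name, round(p, 3))
print("prediction:", classes[probs.index(max(probs))])  # prediction: Cat
```

With two classes and scores [z, 0], softmax gives the first class exactly the sigmoid of z. This is why binary logistic regression is a special case of the multinomial version.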

Why Learn Logistic Regression?

- Simple to implement and understand.

- Provides probabilities along with classifications.

- Works well as a baseline that you can build on with more complex models later.

- Widely used in many fields like finance, healthcare, marketing, and social sciences.

What I Learned

- Logistic Regression is used for classification, most often binary classification.

- Logistic Regression uses the sigmoid function to convert a score into a probability.

- Logistic Regression is commonly trained with gradient descent, which adjusts the model's weights.

- Cross-entropy loss is a way to measure how different the model’s predicted probabilities are from the true answers.

Next Steps

Soon, you'll build your own logistic regression model in Python to classify sentiment, putting theory into practice!